Artificial intelligence technology is being regulated worldwide. Regulatory bodies have either proposed or finalized documents emphasizing outright restrictions, conscientious development, and approval processes. The different focuses across multiple geographies create a patchwork of regulatory complexity for third-party risk managers and cybersecurity professionals seeking to strike a balance between efficiency gains and responsible development.

This post will examine AI regulatory developments and guidelines from the U.S., the U.K., the European Union, and Canada. Additionally, we will look at each regulation’s impact on the third-party risk management landscape.

The European Union Artificial Intelligence Act

On August 1, 2024, the EU AI Act entered into force. This legislation makes the EU the first jurisdiction with a comprehensive law regulating AI, including generative AI and future AI developments. This is familiar territory for Europe: in 2016, it adopted the General Data Protection Regulation (GDPR), which set the benchmark for comprehensive data privacy rules. The AI Act continues the bloc’s record of leading the world with model regulations.

The European Union’s Artificial Intelligence Act, officially the “Regulation of the European Parliament and of the Council Laying Down Harmonised Rules on Artificial Intelligence (Artificial Intelligence Act) and Amending Certain Union Legislative Acts,” was originally proposed in 2021.

The rules in this law are designed to:

- Address risks specifically created by AI applications;

- Propose a list of high-risk applications;

- Set clear requirements for AI systems for high-risk applications;

- Define specific obligations for AI users and providers of high-risk applications;

- Propose a conformity assessment before the AI system is put into service or placed on the market;

- Propose enforcement after such an AI system is placed on the market;

- Propose a governance structure at the European and national levels.

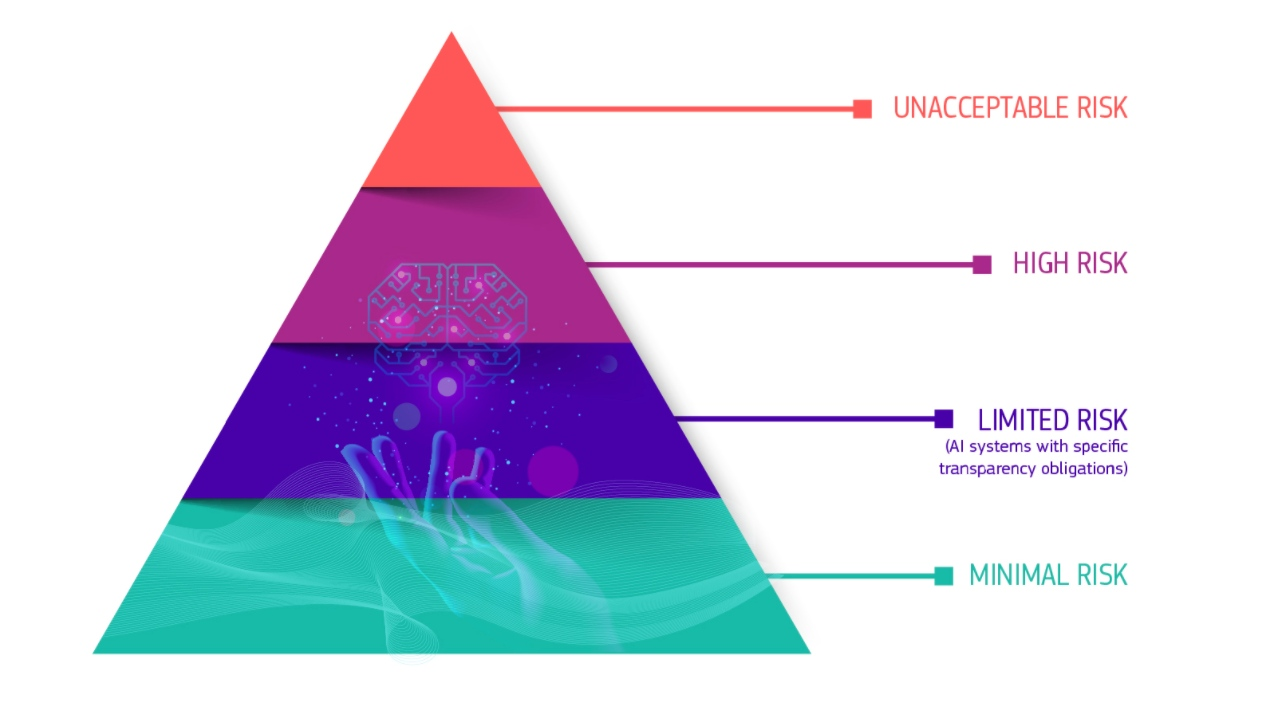

The European Parliament took a risk-based approach to the regulation. They defined four risk categories, outlined using a pyramid model as shown below.

These four levels are defined as:

- Unacceptable Risk – This level covers AI systems that the EU considers a clear threat to the safety, livelihoods, and rights of EU citizens. Examples include social scoring by governments (a ban that appears to target one of the common uses of AI in China) and toys that use voice assistance to encourage dangerous behavior.

- High Risk – AI systems classified as high risk are those used in applications that are critical to society. These include AI used in education access, employment practices, law enforcement, border control and immigration, critical infrastructure, and other situations where someone’s rights might be infringed.

- Limited Risk – This category refers to AI applications with specific transparency obligations. Think of a chatbot on a website. In that specific case, consumers should be aware that they’re interacting with a software program or a machine, so they can make informed decisions on whether to engage or not.

- Minimal Risk – Also called “no risk,” these are AI systems used in applications such as video games or AI-enabled email spam filters. Systems in this category appear to make up the bulk of AI in use in the EU today.

High-risk AI systems must comply with specific, strict rules before they can be placed on the market. According to the EU’s summary of the AI Act, high-risk systems are subject to the following obligations:

- Adequate risk assessment and mitigation systems;

- High quality of the datasets feeding the system to minimize risks and discriminatory outcomes;

- Logging of activity to ensure traceability of results;

- Detailed documentation providing all information necessary on the system and its purpose for authorities to assess its compliance;

- Clear and adequate information to the user;

- Appropriate human oversight measures to minimize risk;

- High level of robustness, security, and accuracy.

All remote biometric identification systems, for example, are considered high-risk. The use of real-time remote biometric identification (e.g., facial recognition) in publicly accessible spaces for law enforcement purposes is prohibited in principle under the act, subject to narrow exceptions.

The AI Act establishes a legal framework for reviewing and approving high-risk AI applications, aiming to protect citizens’ rights, minimize bias in algorithms, and control negative AI impacts.

What does the EU AI Act mean for third-party risk management programs?

Companies located in the EU, or those doing business with EU organizations, must be aware of and comply with the law once the applicable transition periods end. Given the expansive definition of “high risk” in the law, it may make sense to ask vendors and suppliers more concrete questions about how they use AI and how they comply with other relevant regulations.

Organizations should also thoroughly examine their own AI implementation practices. Other European technology laws still apply, so organizations subject to the GDPR should explore ways to integrate AI Act compliance into their existing workflows.

Artificial Intelligence Governance in the United States

While the United States has no comprehensive federal AI legislation today, standards bodies have published extensive guidance in the form of governance frameworks. The current administration has also released a memorandum on federal use of AI focused on innovation, governance, and public trust. Meanwhile, numerous states and localities have proposed and enacted laws addressing a range of AI use cases.

The National Institute of Standards and Technology (NIST) AI Risk Management Framework (AI RMF), introduced in January 2023, provides a methodology for crafting an AI governance strategy within your organization. Following this overall framework, on July 26, 2024, NIST published NIST-AI-600-1, Artificial Intelligence Risk Management Framework: Generative Artificial Intelligence Profile. This profile drills down on the distinct risks associated with generative AI and highlights recommended actions for managing those risks in alignment with the AI RMF.

The NIST AI RMF and Third-Party Risk Management

The AI RMF is divided into two parts. Part 1 provides an overview of the risks and characteristics of what NIST refers to as “trustworthy AI systems.” Part 2 describes four functions to help organizations address the risks of AI systems: Govern, Map, Measure, and Manage. The illustration below reviews the four functions.

The functions in the NIST AI risk management framework.

Organizations should apply risk management principles to minimize the potential negative impacts of AI systems, such as hallucinations, data privacy violations, and threats to civil rights. This also extends to an organization’s use of third-party AI systems and to third parties’ own use of AI. Potential risks of third-party misuse of AI include:

- Security vulnerabilities in the AI application itself. Without the proper governance and safeguards in place, your organization could be exposed to system or data compromise.

- Lack of transparency in methodologies or measurements of AI risk. Deficiencies in measurement and reporting could result in underestimating the impact of potential AI risks.

- AI security policies that are inconsistent with other existing risk management procedures. Inconsistency results in complicated and time-intensive audits that could introduce potential negative legal or compliance outcomes.

According to NIST, the RMF is designed to help organizations address these potential risks.

State-Based AI Governance

In the absence of federal legislation, many individual states are implementing regulations around artificial intelligence. Other states, such as Massachusetts, have clarified how their existing laws apply to developers, suppliers, and users of AI. Recently enacted state AI governance laws include Utah’s AI Policy Act (effective May 2024), Colorado’s AI Act (effective February 2026), and California’s AB 2013 and SB 942 (effective January 2026). Collectively, these laws mandate greater transparency in how AI systems are developed and used, require clear consumer disclosures, and prohibit algorithmic discrimination.

A few common themes, and what they mean for vendor oversight, include:

- Transparency & Clear Disclosures: Verify that vendors clearly disclose AI use and are upfront with consumers about significant AI-driven decisions.

- Fairness & Non-Discrimination: Assess whether vendors regularly test for and address algorithmic biases.

- Documentation & Impact Assessments: Require detailed compliance documentation, including training datasets, impact assessments, and consumer protection practices.

- Data Protection: Emphasize security controls vendors must implement to protect personal information.

- Regulatory Alignment: Encourage vendors to align with recognized standards (like the NIST AI Risk Management Framework) to support compliance.

Existing State Laws May Also Apply to Artificial Intelligence

On April 16, 2024, the Massachusetts Attorney General clarified that existing consumer protection laws apply to AI. The guidance warns against false claims about AI systems’ capabilities, reliability, or safety and reinforces compliance with privacy and anti-discrimination laws. It also highlights disclosure requirements under the Equal Credit Opportunity Act when AI is used in credit decisions. Third-party risk managers should expect similar enforcement trends across other states and ensure vendors’ AI tools meet legal and ethical standards.

Risk management teams should verify that third-party vendors clearly disclose when customers interact with AI, regularly test their systems for biases, and comply with strict consumer protection and data security rules. Leveraging standards like the NIST AI Risk Management Framework can help mitigate liability risks under these new regulations.

The United Kingdom’s Artificial Intelligence Regulation Bill

In the United Kingdom, Lord Holmes of Richmond introduced an AI Regulation Bill in the House of Lords. It is the second bill introduced in Parliament to regulate the use of artificial intelligence in the UK. The first, which addressed both AI and workers’ rights, was presented in the House of Commons toward the end of the 2022 to 2023 legislative session but was discontinued in May 2023 when the session concluded.

This new bill, introduced in November 2023, is broader in focus. Lord Holmes introduced it to put guardrails around AI development and to establish who would be responsible for defining future legislative restrictions on AI in the United Kingdom.

There are a few key features of the bill, which had its first reading in the House of Lords on November 22, 2023. These include:

- The creation of an AI Authority tasked with the primary responsibility of ensuring a cohesive approach to artificial intelligence across the UK government. It’s also in charge of taking a forward-looking approach to AI and ensuring that any future regulatory framework aligns with international frameworks.

- The definition of key regulatory principles, which puts guardrails around the regulations that the proposed AI Authority can and should create. According to the bill, any AI regulations put in place must adhere to the principles of transparency, accountability, governance, safety, security, fairness, and contestability. The bill also notes that AI applications should comply with equalities legislation, be inclusive by design, and meet the needs of “lower socio-economic classes, older people, and disabled people.”

- The establishment of regulatory sandboxes that allow regulators and businesses to work together to test new applications of artificial intelligence effectively. These “sandboxes” may also give companies a way to identify the consumer safeguards appropriate for doing business in the UK.

- A call for an AI Responsible Officer in each company seeking to do business in the UK. This officer’s role is to ensure that the company’s applications of AI are as unbiased and ethical as possible and that the data used in AI remains unbiased.

- Guidelines for transparency, IP obligations, and labeling, stipulating that companies using AI provide a record of all third-party data used to train their AI models, comply with all relevant IP and copyright laws, and clearly label their software as using AI. This component also grants consumers the right to opt out of having their data used to train AI models.

This proposed regulation is still in the early phases of negotiations. It could take a very different form after the second reading in the House of Lords, followed by a subsequent reading in the House of Commons.

Depending on how much of the bill survives the legislative process, it could have a substantial impact on how AI is used in the UK, how models are trained, and the transparency of the broader data-gathering process. Each of these areas has a direct impact on third-party vendor or supplier usage of AI technologies.

The Artificial Intelligence and Data Act (Canada)

In June 2022, the government of Canada began consideration of the Artificial Intelligence and Data Act (AIDA) as part of Bill C-27, the Digital Charter Implementation Act, 2022. The larger C-27 bill is designed to modernize existing privacy and digital law. It includes three sub-acts: the Consumer Privacy Protection Act, the Artificial Intelligence and Data Act, and the Personal Information and Data Protection Tribunal Act.

AIDA’s main goal is to bring consistency to AI regulation throughout Canada. The Act’s companion document identifies several regulatory gaps, including:

- Mechanisms such as human rights commissions provide redress in cases of discrimination. However, individuals subject to AI bias may never be aware that it has occurred.

- Given the wide range of uses of AI systems throughout the economy, many sensitive use cases do not fall under existing sectoral regulators.

- Minimum standards, greater coordination, and expertise are needed to ensure consistent protections for Canadians across use contexts.

The act is currently under discussion, and the Canadian government anticipates that it will take approximately two years for the law to pass and be implemented. The companion document to AIDA identifies six core principles, outlined in the table below:

| Guiding Principle | How AIDA Describes It | What It Could Mean for TPRM |
|---|---|---|
| Human Oversight & Monitoring | Human Oversight means that high-impact AI systems must be designed and developed to enable people managing the system’s operations to exercise meaningful oversight. This includes a level of interpretability appropriate to the context. Monitoring, through measurement and assessment of high-impact AI systems and their output, is critical in supporting effective human oversight. | Vendors and suppliers must establish easily measurable methods to monitor AI usage in their products and workflows. If AIDA passes, organizations will need to understand how their third parties monitor AI usage and incorporate AI into their broader governance and oversight policies. Building human reviews into reporting workflows to check for accuracy and bias is another way to ensure more thorough human oversight and monitoring. |
| Transparency | Transparency means providing the public with appropriate information about how high-impact AI systems are being used. The information provided should be sufficient to allow the public to understand the capabilities, limitations, and potential impacts of the systems. | Organizations should ask their vendors and suppliers how they are using AI, what data is included in their models, and how AI is integrated into their products and services. |
| Fairness and Equity | Fairness and Equity means building high-impact AI systems with an awareness of the potential for discriminatory outcomes. Appropriate actions must be taken to mitigate discriminatory outcomes for individuals and groups. | Organizations should ask how their third parties control for potential bias in their AI usage. This principle may also intersect with new ESG regulations. |
| Safety | Safety means that high-impact AI systems must be proactively assessed to identify harms that could result from use of the system, including through reasonably foreseeable misuse. Measures must be taken to mitigate the risk of harm. | AIDA may introduce new regulations on data usage in the context of AI. Expect new security requirements for AI tooling, and make sure current and prospective vendors answer questions about the security of their AI usage, including basic controls such as data security, asset management, and identity and access management. |
| Accountability | Accountability means that organizations must put in place governance mechanisms needed to ensure compliance with all legal obligations of high-impact AI systems in the context in which they will be used. This includes the proactive documentation of policies, processes, and measures implemented. | New regulations are likely, so companies should ask third parties about their compliance with any emerging reporting requirements and mandates. |
| Validity & Robustness | Validity means a high-impact AI system performs consistently with intended objectives. Robustness means a high-impact AI system is stable and resilient in a variety of circumstances. | Organizations should ask their third parties about any validity issues with AI models in their operations. This concern is most relevant to technology vendors but may extend to the physical supply chain. |

Ultimately, the Canadian government is taking a hard look at how to regulate AI usage nationwide, and new mandates and laws are likely whatever final form AIDA takes. It therefore makes sense for companies doing business in Canada, or working with Canadian companies, to understand upcoming requirements as AIDA moves closer to passage.

Final Thoughts: AI Regulations and Third-Party Risk Management

Governments worldwide are actively debating how to regulate artificial intelligence technology and its development. Regulatory discussions have focused on specific use cases identified as potentially the most impactful on a societal level, suggesting that AI laws will address a combination of privacy, security, and ESG concerns.

We’re quickly seeing how organizations worldwide must adapt their third-party risk management programs to AI technology. Companies are rapidly integrating AI into their operations, and governments will respond in kind. At this point, adopting a more cautious and considerate approach to AI in operations and asking questions of vendors and suppliers is the correct choice for third-party risk managers.

For more on how Mitratech incorporates AI technologies into our Third-Party Risk Management Solutions to ensure transparency, governance, and security, download the white paper, How to Harness the Power of AI in Third-Party Risk Management, or request a demonstration today.

Editor’s Note: This post was originally published on Prevalent.net. In October 2024, Mitratech acquired Prevalent, the AI-enabled third-party risk management solution provider. The content has since been updated to include information aligned with our product offerings, regulatory changes, and compliance.